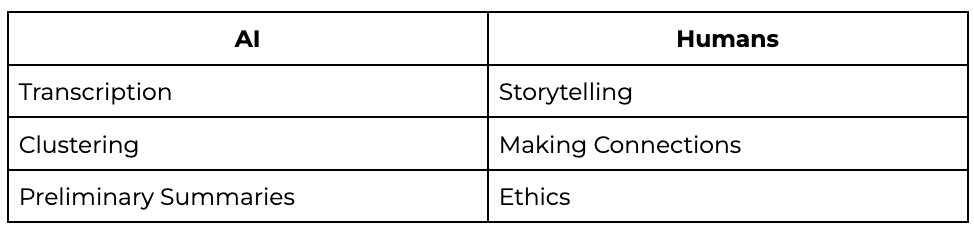

Where AI adds value vs. where human researchers are irreplaceable

AI, the collaborator we didn’t see coming.

For years, the debate was whether AI would replace us. The truth is, it’s already working beside us. It has not taken the researcher's seat. Far from it. But it is quietly fuelling the tools that help us analyse and scale our reach faster. The question isn’t whether AI will take over; it’s how we make sure it adds value without diluting the human input that makes research meaningful.

AI is not a thing of the future; it is already an integral part of our everyday workflow. From tagging tools to generative text assistants, it is changing how research is designed, executed, and analysed.

Teams that once spent days synthesising feedback now do so in hours. Insights previously out of reach can now be surfaced instantly. But with these advancements come new tensions, specifically around speed versus sensitivity and automation versus authenticity.

The sad truth is, AI is only as good as what it has been taught. Researchers carry a big responsibility for discernment here: making sure that efficiency does not override empathy in decision-making, especially when an AI system’s decisions actively influence users’ experiences.

The goal isn't to resist the technology but to learn how to partner with it in a manner that preserves the fundamentals of human understanding.

AI shines in tasks that demand speed, repetition, and scale. It can sift through thousands of open-text responses in minutes, group feedback into clear themes, and even predict sentiment, sometimes before we’ve opened our morning emails. That kind of power removes a huge burden from us: instead of being overwhelmed by data, we get to focus on what really matters, which is making sense of the stories behind the data.

AI built on NLP or machine learning algorithms can extract insights that would otherwise take days, weeks, or even longer to extract manually. When I’ve worked on projects alone, AI has felt like the teammate I didn’t have but definitely needed, from categorising responses and flagging patterns to helping me move from raw data to story-building faster. That speed doesn’t just save time; it gives us headspace to think strategically, which is a key part of working as a researcher, in some cases more than the research itself.

Where AI also excels is in scalability. Surveys that used to reach hundreds can now reach thousands with automated analysis layered in. Predictive models can highlight emerging trends or anomalies in seconds, making it easier to spot shifts in behaviour early. For cross-functional teams, that kind of accessibility levels the playing field. It democratises research, allowing product and design teams to engage with insights without bottlenecks.

But, and it’s a big but, AI’s strength lies in recognition, not reasoning. It can spot what’s common but struggles with what’s nuanced. That’s where humans remain irreplaceable.

Do this next: Use AI to speed up your data handling, but don’t skip the human review. Run one analysis with AI and another manually. Compare what each captures and misses. The differences will tell you where your human value really lies.

AI might help us find the ‘what’, but humans uncover the ‘why’. Empathy, context, and cultural sensitivity are the currency of research, and they can’t be automated. When participants hesitate before answering or when a tone shifts mid-interview, those are the exact moments that are invisible to algorithms.

What sets humans apart is our ability to notice the less obvious. We interpret nuance and can sense when something’s off. We don’t just read the data, we understand the story behind it. That’s why our questions tend to dig deeper. We see when the numbers point to systemic gaps or when some perspectives are missing altogether. Ethics and inclusion? They don’t come from algorithms. They come from human discernment.

People thrive on creativity.

AI can simulate creativity, but it can’t originate it. Its “out of the box” thinking still happens within the boundaries of its training data. It generates ideas by remixing what it already knows, which is useful, but not revolutionary.

That’s why when it comes to concept generation, human imagination is irreplaceable. We’re the ones who see gaps and opportunities AI doesn’t even know to look for.

Do this next: When you next run a co-creation session, test this balance. Use AI to generate an initial set of ideas, then facilitate a live human session around the same challenge. Compare the outcomes: which are more novel, feasible, or emotionally resonant? The contrast is usually striking.

There’s a growing trend toward AI-generated participants (and this could be a blog post in itself…). Virtual humans are trained on synthetic or amalgamated data, but this approach risks hollowing out what makes research human. These models are reflections of datasets, not people. If the data used to create them is biased (and a lot of it is), then the participants they simulate will take on all of those biases too.

Talking to real people, messy, inconsistent, emotional, and surprising, is what grounds us in reality. It’s where insight becomes empathy. If we’re going to contend with bias, I’d rather it come from living, breathing humans than from an algorithmic projection of them.

Do this next: If you’re tempted to test synthetic participants, run a small comparison: conduct one short study with real participants and another with AI-generated ones. Compare not just the themes, but the texture of the insights. How did empathy, surprise, and nuance differ? You will likely find that human responses are far richer and often challenge assumptions AI fails to question.

The real opportunity: collaboration, not competition

The future of research isn’t human versus AI. It’s human with AI. The most effective researchers will be those who understand how to harness automation without outsourcing thinking. AI can handle the mechanical parts, while humans lead the interpretation.

A healthy partnership means giving AI tasks that amplify our impact, not diminish it. That means building workflows where tools take care of efficiency so we can focus on empathy, strategy, and design influence. In doing so, we future-proof not only our roles but the craft of research itself.

Try this: If you use AI to support analysis, take one project and manually code a subset of responses yourself. Then compare your themes to the AI’s output. You’ll probably notice that while AI gets the main patterns right, it misses subtle contextual details. Feed those missed insights back into the model (if possible) or document them for your team. Over time, this kind of feedback loop trains both the AI and the humans to be more aware of what gets lost in automation.
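If your codes live in a spreadsheet export, the comparison step can be scripted. Below is a minimal sketch, not a prescribed method: it assumes each response has a single theme label from you and a single label from the AI tool, keyed by a response ID. The function name `compare_codes`, the IDs, and the theme labels are all made up for illustration.

```python
from collections import Counter


def compare_codes(manual, ai):
    """Compare manual and AI theme labels for the same responses.

    Both arguments map a response ID to one theme label.
    Only responses present in both mappings are compared.
    """
    shared = sorted(set(manual) & set(ai))
    if not shared:
        raise ValueError("no overlapping response IDs to compare")

    # Responses where the human label and the AI label differ
    disagreements = [
        (rid, manual[rid], ai[rid]) for rid in shared if manual[rid] != ai[rid]
    ]
    agreement = 1 - len(disagreements) / len(shared)

    # Which (manual, AI) label pairs get confused most often
    confusions = Counter((m, a) for _, m, a in disagreements)
    return agreement, disagreements, confusions


# Hypothetical sample data: four responses coded by a researcher and by an AI tool
manual_codes = {"r1": "pricing", "r2": "onboarding", "r3": "trust", "r4": "pricing"}
ai_codes = {"r1": "pricing", "r2": "onboarding", "r3": "pricing", "r4": "pricing"}

agreement, disagreements, confusions = compare_codes(manual_codes, ai_codes)
print(f"Agreement: {agreement:.0%}")
for rid, manual_label, ai_label in disagreements:
    print(f"{rid}: you coded '{manual_label}', AI coded '{ai_label}'")
```

The agreement percentage is only a starting point. The disagreement list is the useful part: those are the responses to re-read, because they tend to show where context or tone changed the meaning in a way the tool did not pick up.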

MIT Sloan (2025) found that AI is least likely to replace work tasks that depend on capacities such as empathy, judgment, ethics, and hope.

Nielsen Norman Group (2024) observed that AI-driven clustering can identify broad themes effectively but still misses “the nuance and emotional undertone that define user experience.”

Stanford HAI (2025) noted that as AI tools grow more capable, ethical guardrails and human oversight remain the most cited requirements for people to consider insight generation trustworthy.

So after all of that, where does that leave us? AI is likely here to stay. So, for us, the future of research lies not in choosing sides but in developing a partnership that plays to both strengths.

Speed and scale…AI can handle that. Creativity and empathy…leave that to us. The more we use AI to remove friction from the process, the more space we create for unconstrained ideas to form outside the bounds of what LLMs were trained on.

If speed is AI’s superpower, ethics and imagination are ours. They are the barometer that keeps research grounded in reality.